Update December 12, 2023 at 12:05PM PT:

Our next-generation Ray-Ban Meta smart glasses include Meta AI for an integrated hands-free, on-the-go experience. You can use Meta AI to spark creativity, get information and control the glasses just by using your voice. Today we’re introducing new updates to make the glasses smarter and more helpful than ever before.

We’re launching an early access program for Ray-Ban Meta smart glasses customers to try out and provide feedback on upcoming features ahead of their release. Starting today, customers who have opted in to the program will have access to a new test of multimodal AI-powered capabilities. You won’t just be able to speak with your glasses — the glasses will be able to understand what you’re seeing using the built-in camera. You can ask Meta AI for help writing a caption for a photo taken during a hike or you can ask Meta AI to describe an object you’re holding.

As you try these new experiences, bear in mind that these multimodal AI features are still being tested and may not always get it right. We’re continuing to learn what works best and improving the experience for everyone. Your feedback will help make Ray-Ban Meta smart glasses better and smarter over time. This early access program is open to Ray-Ban Meta smart glasses owners in the US. Those interested can enroll using the Meta View app on iOS and Android. Please make sure you have the latest version of the app installed and that your smart glasses are updated as well.

In addition to the early access program, we’re beginning to roll out the ability for Meta AI on the glasses to retrieve real-time information powered in part by Bing. You can ask Meta AI about sports scores or information on local landmarks, restaurants, stocks and more. For example, you can say, “Hey Meta, who won the Boston Marathon this year in the men’s division?” or “Hey Meta, is there a pharmacy close by?” Real-time search is rolling out in phases to Ray-Ban Meta smart glasses customers in the US.

Learn more about multimodal generative AI systems on Meta AI.

Originally published on December 6, 2023 at 9:00AM PT:

It’s been an incredible year for AI at Meta. We introduced you to new AI experiences across our apps and devices, opened access to our Llama family of large language models, and published research breakthroughs like Emu Video and Emu Edit that will unlock new capabilities in our products next year. We can’t wait to see what the coming advancements in content generation, voice and multimodality will bring, enabling us to deliver new creative and immersive applications. Today, we’re sharing updates to some of our core AI experiences and new capabilities you can discover across our family of apps.

The Evolution of Meta AI

Meta AI is our virtual assistant you can access to answer questions, generate photorealistic images and more. We’re making it more helpful, with more detailed responses on mobile and more accurate summaries of search results. We’ve also made it more likely that you’ll get a helpful response to a wider range of requests. To interact with Meta AI, start a new message and select “Create an AI chat” on our messaging platforms, or type “@MetaAI” in a group chat followed by what you’d like the assistant to help with. You can also say “Hey Meta” while wearing your Ray-Ban Meta smart glasses.

And Meta AI is now helping you outside of chats, too. It’s doing some of the heavy lifting behind the scenes to make our product experiences on Facebook and Instagram more fun and useful than ever before. The large language model technology behind Meta AI is used to give people in various English-language markets options for AI-generated post comment suggestions and community chat topic suggestions in groups, serve search results, and even enhance product copy in Shops. It’s also powering an entirely new standalone experience for creative hobbyists called imagine with Meta AI.

Create and Riff on Images With Friends

One of Meta AI’s most commonly used features across our messaging apps is imagine, our text-to-image generation capability that lets you create and share images on the fly. We’re always looking for ways to make our AIs even more fun and social, so today we’re excited to add a new feature to Meta AI on Messenger and Instagram called reimagine. Here’s how it works in group chat: Meta AI generates and shares the initial image you requested, then your friend can press and hold on the picture to riff on it with a simple text prompt and Meta AI will generate an entirely new image. Now you can kick images back and forth, having a laugh as you try to one-up each other with increasingly wild ideas.

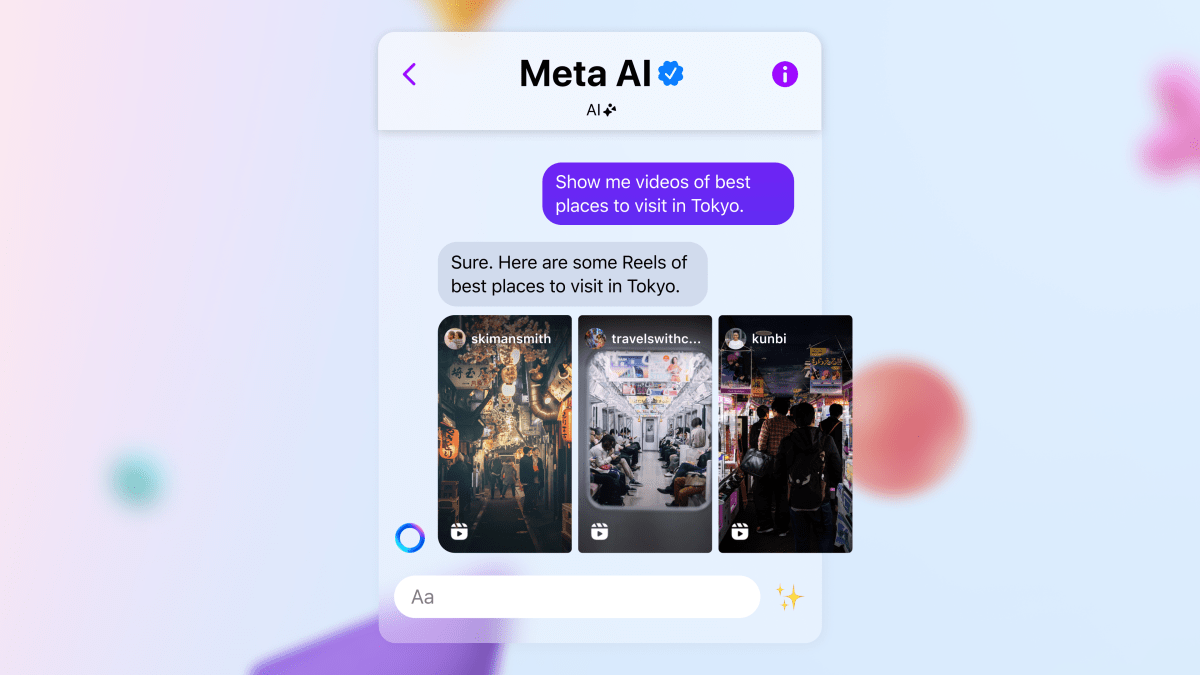

Discover New Experiences With Reels in Meta AI

Sometimes it’s not enough to simply read about something — you want to see it or experience it through more than text. Reels let you do just that. They’re a great way to discover new content, connect with creators and find inspiration. And now, we’re starting to roll out Reels in Meta AI chats, too.

Say you’re planning a trip to Tokyo with friends in your group chat. You can ask Meta AI to recommend the best places to visit and share Reels of the top sites to help you decide which attractions are must-sees.

This is just the first example of how we’ll build deeper integrations across our apps to make Meta AI a more connected and personal assistant over time.

Enhancing Your Experience on Facebook

We’re also continuing to innovate on Facebook to help people with everyday experiences, making expression and discovery easier than ever before.

With Meta AI under the hood, we’re exploring ways for you to use AI to help you create the perfect birthday greeting to share with your bestie, edit your own Feed posts, draft a clever introduction for your Facebook Dating profile or even set up a new Group.

We’re also testing ways to easily create and share AI-generated images on Facebook — like using Meta AI to convert images from landscape to portrait orientation so you can share them more easily to Stories.

We’re also testing using Meta AI to surface relevant information in Groups, so you don’t miss out on the conversations that matter most, and to suggest topics for new chats, helping you stay active in your communities. On Marketplace, we’re testing using Meta AI to help people learn more about the products they’re considering buying and easily find related or alternative items. And we’re testing using Meta AI to improve our search capabilities for friends, Pages, Groups and Marketplace listings.

Helping Creators Respond to Their Fans

We want to give creators generative AI tools to help them work more efficiently and connect with more of their community. Building off our recent updates on Instagram, we’re starting to test suggested replies in DMs to help creators engage with their audiences faster and more easily. When creators in the test open a message in their DMs, Meta AI will work in the background to draft relevant replies for consideration based on their tone and content.

Experience Imagine With Meta AI

We’ve enjoyed hearing from people about how they’re using imagine, Meta AI’s text-to-image generation feature, to make fun and creative content in chats. Today, we’re expanding access to imagine outside of chats, starting in the US at imagine.meta.com. This standalone experience for creative hobbyists lets you create images with technology from Emu, our image foundation model. While our messaging experience is designed for more playful, back-and-forth interactions, you can now create free images on the web, too.

Chatting With Our AIs

Meta AI isn’t the only AI we’re evolving. We’re also improving our other AIs based on your feedback. Search is coming to more of our AIs. Two of our sports-related AIs, Bru and Perry, have been serving up responses powered by Bing since day one. And now we’re rolling out this functionality to Luiz, Coco, Lorena, Tamika, Izzy and Jade, too.

In addition, we’re experimenting with a new feature for select AIs to add long-term memory, so what they learn from your conversation isn’t lost after your chat is over. That means you can return to a particular AI and pick up where you left off. Our goal is to bring the potential for deeper connections and extended conversational capabilities to your chats with AIs, including Billie, Carter, Scarlett, Zach, Victor, Sally and Leo. You can also clear your chat history with our AIs at any time. Check out our Generative AI Privacy Guide for more info.

To chat with our AIs, start a new message and select “Create an AI chat” on Instagram, Messenger or WhatsApp. They’re now available to anyone in the US.

Invisible Watermarking, Red Teaming and More to Come

We’re committed to building responsibly with safety in mind across our products and know how important transparency is when it comes to the content AI generates. Many images created with our tools indicate the use of AI to reduce the chances of people mistaking them for human-generated content. In the coming weeks, we’ll add invisible watermarking to the imagine with Meta AI experience for increased transparency and traceability. The invisible watermark is applied with a deep learning model. While it’s imperceptible to the human eye, the invisible watermark can be detected with a corresponding model. It’s resilient to common image manipulations like cropping, color changes (brightness, contrast, etc.), screenshots and more. We aim to bring invisible watermarking to many of our products with AI-generated images in the future.

We’re also continuing to invest in red teaming, which has been a part of our culture for years. As part of that work, we pressure-test our generative AI research and features that use large language models (LLMs) with prompts we expect could generate risky outputs. Recently, we introduced Multi-round Automatic Red-Teaming (MART), a framework for improving LLM safety that trains an adversarial LLM and a target LLM through automatic, iterative adversarial red teaming. We’re working on incorporating the MART framework into our AIs to continuously red team and improve safety.

Finally, we continue to listen to people’s feedback based on their experiences with our AIs, including Meta AI.

We’re still just scratching the surface of what AI can do. Stay tuned for more updates in the new year.