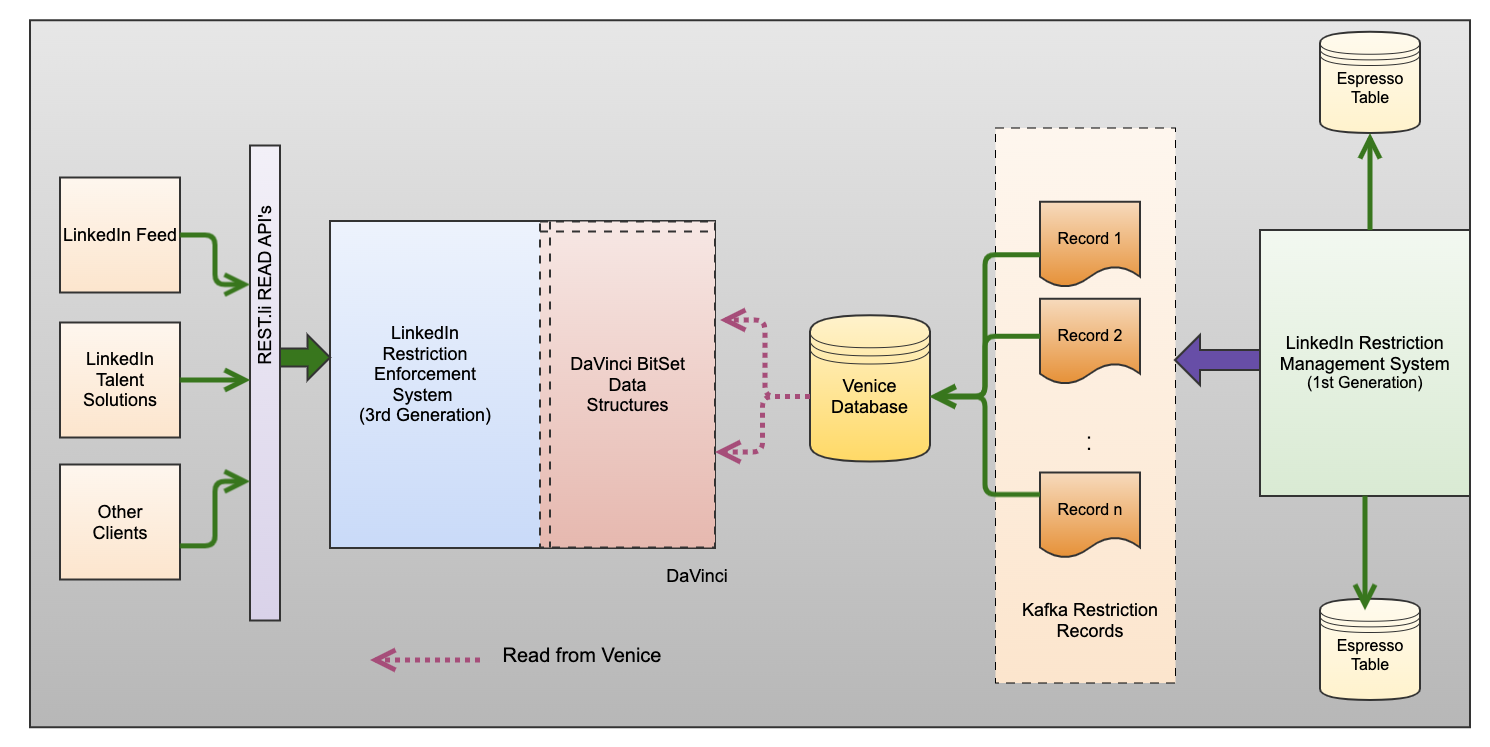

Figure 4. Client-side cache with server-side cache for restrictions data

Shortcomings: Adding the two layers, server-side and client-side caching, brought significant improvements, particularly in scenarios with high cache-hit rates. While caching reduced latency and improved system responsiveness, it also introduced new challenges that underscored the importance of cache consistency. Periodic latency spikes became a concern, primarily because of missing records and the absence of a distributed cache strategy in our existing setup. Our cache framework operated in isolation at the application host level, a configuration that posed challenges for both consistency and performance.

In light of these challenges, we needed to refine our cache strategies further and strengthen our restriction enforcement, which meant thinking proactively about solutions that could handle this complexity.

Full refresh-ahead cache

In our quest for even more efficient restriction enforcement, we turned to the full refresh-ahead cache, a concept designed to improve our system’s performance further. The premise was simple: each client application host would store all restriction data in its in-memory cache. On every restart or boot-up, each client-side application host read all the restriction records from our servers, so that every member restriction record resided in the cache of each application host.

The advantages of this approach were clear. We saw a remarkable improvement in latencies, primarily because all member restriction data was readily available on the client side, removing the need for network calls. To maintain cache freshness, we implemented a polling mechanism within our library that regularly checked for newer member restriction records and kept the client-side cache up to date.
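The load-then-poll pattern described above can be sketched roughly as follows. This is a minimal illustration, not the actual library: the record shape, the `fetch_all` and `fetch_since` callbacks, and the version counter are all hypothetical stand-ins for whatever the server API exposes.

```python
import threading
from typing import Callable, Dict, Iterable, Optional, Tuple

# Hypothetical record shape: (member_id, restriction, version).
Record = Tuple[int, str, int]


class RefreshAheadCache:
    """Holds every restriction record in memory and polls for newer ones."""

    def __init__(
        self,
        fetch_all: Callable[[], Iterable[Record]],
        fetch_since: Callable[[int], Iterable[Record]],
    ) -> None:
        self._fetch_all = fetch_all      # assumed: returns all records
        self._fetch_since = fetch_since  # assumed: returns records newer than a version
        self._records: Dict[int, str] = {}
        self._latest_version = 0
        self._lock = threading.Lock()

    def _apply(self, records: Iterable[Record]) -> None:
        with self._lock:
            for member_id, restriction, version in records:
                self._records[member_id] = restriction
                self._latest_version = max(self._latest_version, version)

    def load(self) -> None:
        """Full load, run once at restart or boot-up."""
        self._apply(self._fetch_all())

    def poll_once(self) -> None:
        """Incremental refresh: pull only records newer than what we hold."""
        self._apply(self._fetch_since(self._latest_version))

    def get(self, member_id: int) -> Optional[str]:
        """Local lookup; no network call on the read path."""
        with self._lock:
            return self._records.get(member_id)

    def start_polling(self, interval_seconds: float) -> threading.Event:
        """Poll in a background thread until the returned event is set."""
        stop = threading.Event()

        def loop() -> None:
            while not stop.wait(interval_seconds):
                self.poll_once()

        threading.Thread(target=loop, daemon=True).start()
        return stop


# Usage with an in-memory list standing in for the server.
server = [(1, "SUSPENDED", 1), (2, "LIMITED", 2)]
cache = RefreshAheadCache(
    fetch_all=lambda: list(server),
    fetch_since=lambda v: [r for r in server if r[2] > v],
)
cache.load()
server.append((3, "SUSPENDED", 3))
cache.poll_once()  # picks up only the record added after the full load
```

Note that every host repeats the full `load()` on restart, which is where the burst of traffic and database load comes from in a design like this.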

Shortcomings: However, as with any mechanism, there were trade-offs. The full refresh-ahead cache approach posed its own set of challenges. Each client was burdened with maintaining a substantial in-memory footprint to accommodate the entire member restriction data. While the data size itself was manageable, the demands of client-side memory were significant. Moreover, this architecture introduced a burst of network traffic during application restarts or the deployment of new changesets, potentially straining our infrastructure.

Additionally, as the memory on the client side did not persist, performing a full refresh-ahead cache became a resource-intensive and time-consuming operation. Each host was required to undertake the same process, exerting substantial strain on our underlying Oracle database. This strain manifested in various ways, from performance bottlenecks and increased latencies to elevated CPU usage, imposing substantial operational overhead on our teams. The challenges persisted despite our efforts to fine-tune the Oracle database, including adding indexes and optimizing SQL queries.

Furthermore, the architecture’s Achilles’ heel lay in cache inconsistencies. Maintaining perfect synchronization between the cache and the server was a demanding task requiring precise data fetching and storage. Any failures, even after limited retries, could result in some records being missed, often due to factors like network failures or unexpected errors. These inconsistencies were a cause of concern, hinting at potential gaps in our ability to enforce all restrictions consistently.

Bloom filter

In our pursuit of optimization, we turned to Bloom filters (BF). BFs proved to be a transformative addition to our toolkit, offering a new approach to storing and serving restrictions at scale. Unlike conventional caching mechanisms, a BF let us determine, with remarkable efficiency, whether a given member restriction was present in the filter or not.

The strength of a BF lies in its ability to represent a large dataset of restrictions succinctly. Rather than storing the complete restriction dataset, we used BFs to encode these restrictions in a compact and highly efficient manner. Unlike traditional caching, which could quickly consume substantial in-memory space, this let us conserve resources while maintaining rapid access to restriction information. The essence of a BF is its probabilistic nature: it rapidly answers queries about the potential presence of an element, with a small possibility of false positives but no false negatives. Capitalizing on this quality, we could quickly assess whether a member’s restriction was among the encoded data, and proceed with the required restriction action when the BF signaled a positive match. This approach streamlined our system and kept our memory footprint lean, an essential consideration as our platform continued to scale to serve millions of LinkedIn members worldwide.
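To make the mechanics concrete, here is a minimal Bloom filter sketch. It is illustrative only and not the implementation used in production; the key format, the bit-array size, the number of hash functions, and the double-hashing scheme built on SHA-256 are all assumptions chosen for the example.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """A fixed-size bit array probed by several hash-derived positions."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self._bits = bytearray((num_bits + 7) // 8)

    def _positions(self, key: str) -> Iterator[int]:
        # Derive num_hashes positions from two 64-bit values (double hashing).
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # keep the step non-zero
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self._bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key: str) -> bool:
        # False means definitely absent; True means possibly present.
        return all(
            self._bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(key)
        )


# Usage: hypothetical member keys.
restricted = BloomFilter(num_bits=10_000, num_hashes=7)
restricted.add("member:42")
is_restricted = restricted.might_contain("member:42")  # always True once added
```

Only the bit array is kept in memory, not the restriction records themselves, which is what keeps the footprint small. The cost is that a `True` answer can occasionally be wrong, while a `False` answer never is.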

Shortcomings: The adoption of BF was a clear step forward in our pursuit of scalability. Instead of merely enlarging our memory capacity to accommodate an ever-expanding list of restrictions, we could manage and access these restrictions without excessive memory requirements. The probabilistic nature of a BF did introduce a trade-off: queries were extremely fast but, in rare instances, could return a false positive. For our use case, where rapid identification of restrictive conditions was paramount, this was a minor concession. BF became the linchpin of our scalable restriction enforcement strategy, delivering fast results while optimizing resource allocation.
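The size of this trade-off can be estimated with the standard Bloom filter approximations, where $n$ is the number of stored elements, $m$ the number of bits, $k$ the number of hash functions, and $p$ the false-positive probability. The figures below are hypothetical and chosen only to illustrate the arithmetic; they are not numbers from our system.

$$
p \approx \left(1 - e^{-kn/m}\right)^{k}, \qquad
m = -\frac{n \ln p}{(\ln 2)^{2}}, \qquad
k = \frac{m}{n} \ln 2
$$

For example, assuming $n = 10^{7}$ restrictions and a target of $p = 0.01$:

$$
\frac{m}{n} = \frac{-\ln 0.01}{(\ln 2)^{2}} \approx \frac{4.605}{0.4805} \approx 9.59 \text{ bits per element}, \qquad
m \approx 9.59 \times 10^{7} \text{ bits} \approx 12 \text{ MB}, \qquad
k \approx 9.59 \times 0.693 \approx 6.64 \rightarrow 7
$$

Under these assumptions, roughly 12 MB of bits and 7 hash functions would hold ten million entries at a 1% false-positive rate, regardless of how large each underlying record is.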

Second Generation

LinkedIn’s remarkable growth brought both opportunities and challenges. As our member base expanded, we encountered operational complexities in managing a system that could efficiently enforce member restrictions across all product surfaces. System outages and inconsistencies became unwelcome companions on our journey. Faced with these challenges, we reached a crucial juncture that demanded a fundamental overhaul of our entire system.

Our journey towards transformation centered on a set of principles, each geared towards optimizing our system:

- Support high QPS (4-5 million QPS): The need to handle restriction enforcement for every request across LinkedIn’s extensive product offerings called for a system capable of sustaining a high QPS rate.

- Ultra low latency (<5 ms): Ensuring a swift response time, with latency consistently under 5 milliseconds, was imperative to uphold the member experience across all workflows.

- Five 9’s availability: We committed to achieving 99.999% availability, ensuring that every restriction was enforced without exception, safeguarding a seamless experience for our valued members.

- Low operational overhead: To optimize resource allocation, we aimed to minimize the operational complexity associated with maintaining system availability and consistency.

- Small delay in time to enforce: We set stringent benchmarks for minimizing the time it took to enforce restrictions after they were initiated.

With these principles as our North Star, we began to redesign our architecture from the ground up.