Today, we’re launching new generative AI features. As with other Meta products, we took steps to protect your privacy and offer greater transparency as we built them. We developed privacy mitigations through our privacy review process and external engagements. Below are details on how we trained our models, how interacting with our conversational AIs differs from interacting with friends or family, and where you can find more information about your privacy and our new generative AI features.

How do we train our generative AI models in a way that respects people’s privacy?

Earlier this year, we released our large language model, Llama 2, for academic and commercial purposes, and we shared specific details on how we trained the foundational models, which didn’t include Meta user data. The consumer generative AI features we launched today leverage the work we did to build our large language model, and we included additional privacy safeguards and integrity mitigations as we built new models and used information from our products and services.

Generative AI models require a large amount of data to train effectively, so we use a combination of sources, including information that’s publicly available online, licensed data and information from Meta’s products and services. For publicly available online information, we filtered the dataset to exclude certain websites that commonly share personal information. Publicly shared posts from Instagram and Facebook, including photos and text, were part of the data used to train the generative AI models underlying the features we announced at Connect. We didn’t train these models on people’s private posts, and we do not use the content of your private messages with friends and family to train our AIs. We may use data from your use of AI stickers, such as your searches for a sticker to use in a chat, to improve our AI sticker models.

We use the information people share when interacting with our generative AI features, such as Meta AI or businesses that use generative AI, to improve our products and for other purposes. You can read more about the data we collect and how we use your information in our new Privacy Guide, the Meta AI Terms of Service and our Privacy Policy.

What happens with the information I send to the generative AI features?

The AIs may retain and use information you share in a chat to provide more personalized responses or relevant information in that conversation, and we may share certain questions you ask with trusted partners, such as search providers, to give you more relevant, accurate, and up-to-date responses.

It’s important to know that we train and tune our generative AI models to limit the possibility that private information you share with generative AI features appears in responses to other people. We use automated technology and human reviewers to review interactions with our AI so we can, among other things, reduce the likelihood that model outputs include someone’s personal information and improve model performance.

To give you more control, we’ve built in commands that let you delete information shared in any chat with an AI across Messenger, Instagram, or WhatsApp. For example, you can delete your AI messages by typing “/reset-ai” in a conversation. Using a generative AI feature provided by Meta does not link your WhatsApp account information to your account information on Facebook, Instagram, or any other apps provided by Meta.

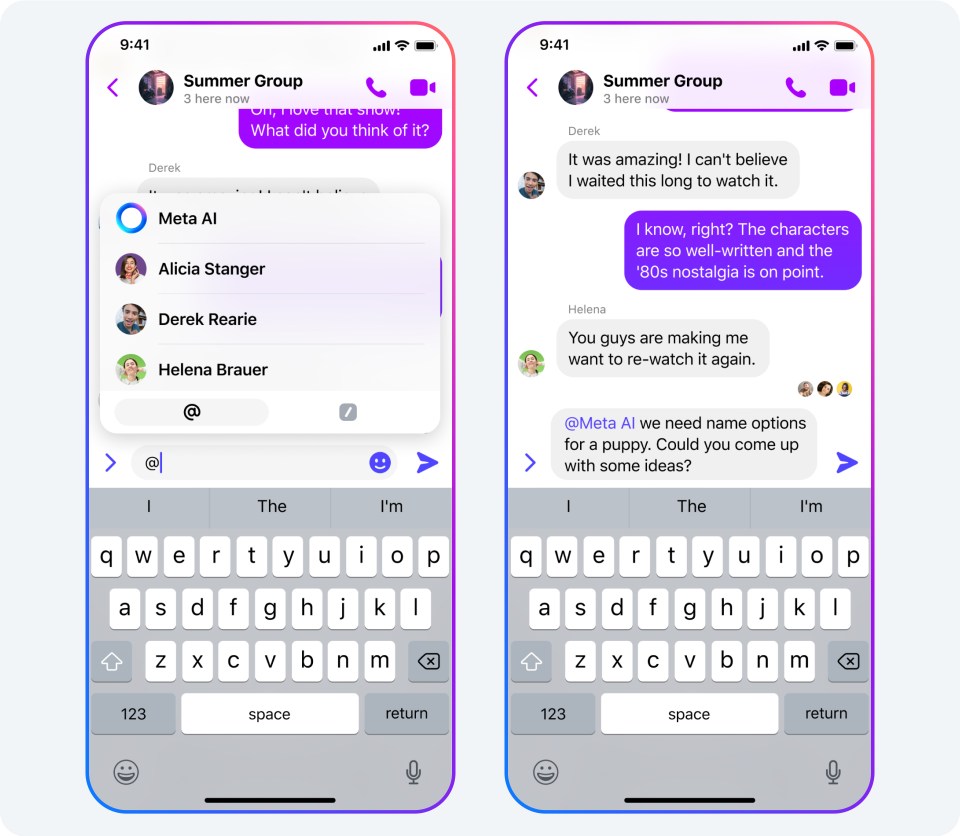

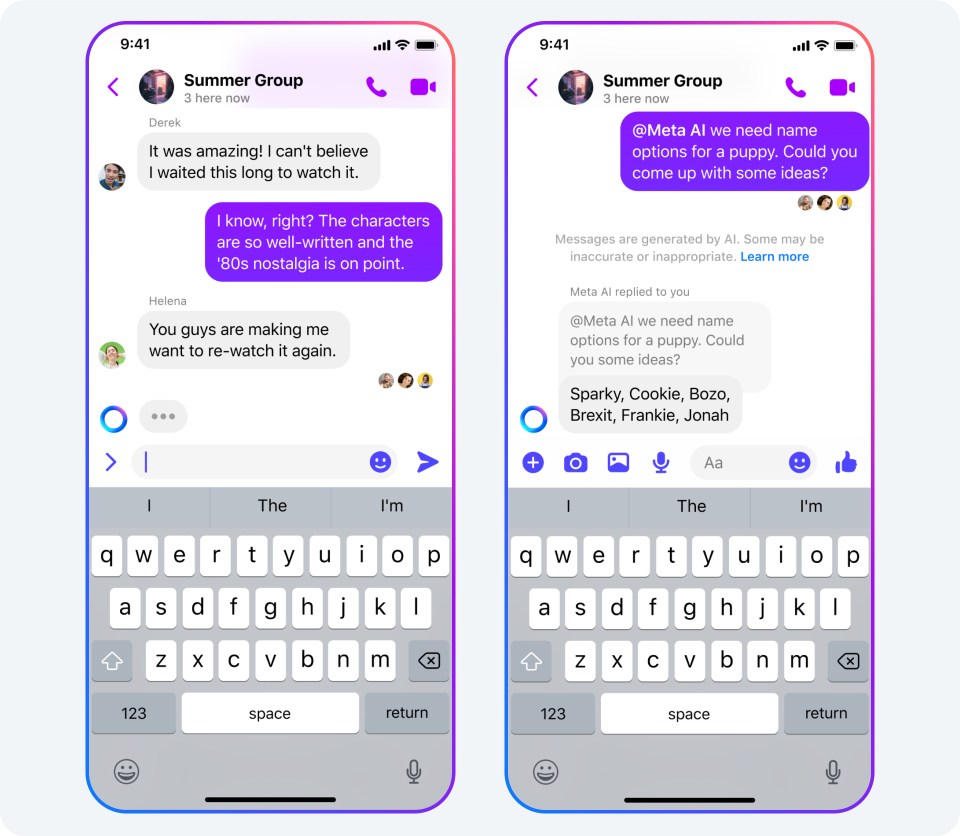

As Meta AI works today, Meta cannot bring it into a chat in WhatsApp, Instagram or Messenger, and it can’t message you or your group first. In group chats with friends and family where someone asks Meta AI to join, this conversational AI only reads messages that invoke “@Meta AI” or messages where a reply invokes “@Meta AI.” Meta AI does not access other messages in the chat.

Where can I find more information about Meta’s generative AI features?

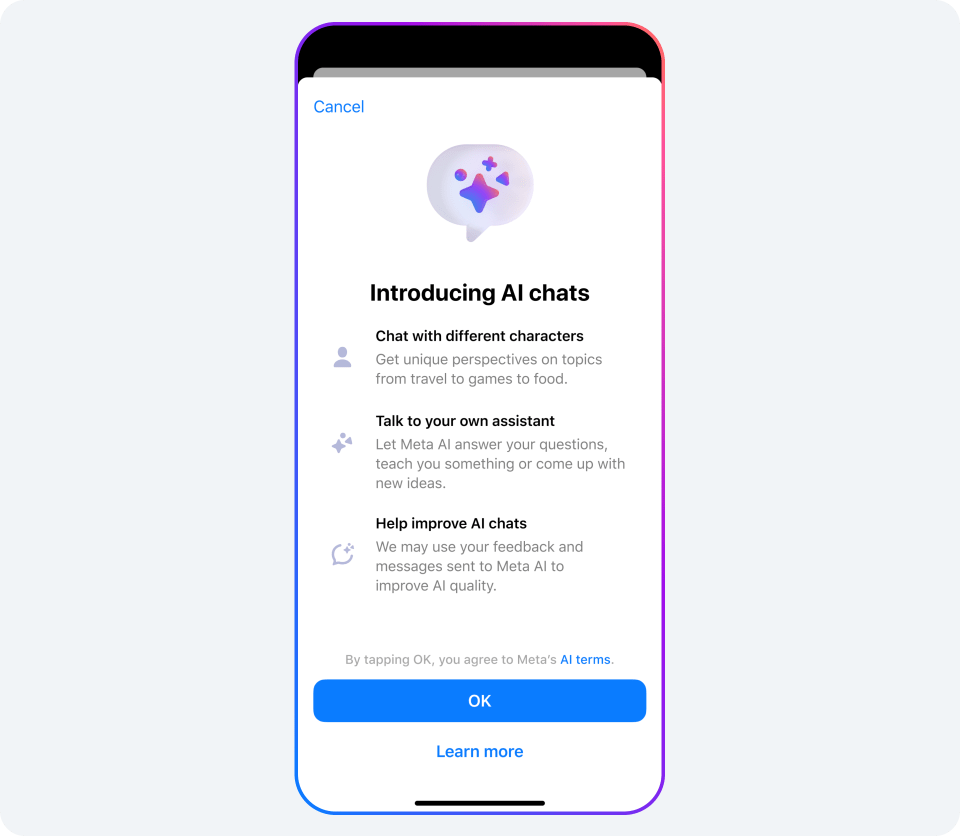

We built transparency into people’s interactions with our generative AI features and developed educational materials on generative AI and privacy. We crafted an introductory, in-product experience to help people understand generative AI features when they first engage with them. This includes setting expectations around the limitations of our generative AI features and offering links to additional, helpful information for those who want to learn more. For example, we let people know that our generative AI models may not have the most complete or recent information.

We also provide in-product transparency tools that help people understand when they are interacting with or seeing content created by our generative AI features. For example, photorealistic images created by Meta’s generative AI features include visual watermarks to make it clear that the image was created with AI. We’ve also published Help Center articles to help you understand how to interact with our generative AI features.

Our Newsroom post on building generative AI responsibly provides more detail on other safety measures for the generative AI features we’re launching at Connect. We also developed two Generative AI System Cards that can be found on Meta’s AI website and a transparency resource that provides more details on our generative AI model and features.

We have a responsibility to protect your privacy and have teams dedicated to this work for everything we build. Alongside industry peers, we’re participating in the Partnership on AI’s Synthetic Media Framework and we have signed onto the White House’s voluntary commitments for AI. These collaborations and our ongoing work with experts, policymakers, advocates and more help us improve the experiences of people interacting with our generative AI features. We’ll continue to build on these efforts and integrate additional privacy safeguards as we improve our generative AI technologies.