Sextortion is a horrific crime in which financially driven scammers target young adults and teens around the world, threatening to expose their intimate imagery if they don’t get what they want. Today, we’re announcing new measures in our fight against these criminals – including an education campaign to raise awareness among teens and parents about how to spot sextortion scams and what to do to take back control if they’re targeted. We’re also announcing significant new safety features to further help prevent sextortion on our apps.

A New Campaign to Help Teens and Parents Spot Sextortion Scams

As well as developing safety features to help protect our community, we want to help young people feel confident that they can spot the signs of a sextortion scam. That’s why we’ve worked with leading child safety experts, the National Center for Missing and Exploited Children (NCMEC) and Thorn – including Thorn’s Youth Advisory Council – to develop an educational video that helps teens recognize signs that someone may be a sextortion scammer. These red flags include coming on too strong, asking to trade photos, or suggesting moving the conversation to a different app.

“The dramatic rise in sextortion scams is taking a heavy toll on children and teens, with reports of online enticement increasing by over 300% from 2021 to 2023. Campaigns like this bring much-needed education to help families recognize these threats early. By equipping young people with knowledge and directing them to resources like NCMEC’s CyberTipline and Take It Down, we can better protect them from falling victim to online exploitation.”

– John Shehan, a Senior Vice President from the National Center for Missing & Exploited Children

Embarrassment or fear can prevent teens from asking for help, so our video reassures teens that sextortion is never their fault, and explains what they can do to take back control from scammers. The video directs teens to instagram.com/preventsextortion, which includes tips – co-developed by Meta and Thorn – for teens affected by sextortion scams; a link to NCMEC’s Take It Down tool, which helps prevent teens’ intimate images from being shared online; and live chat support from Crisis Text Line in the US.

We’ll be showing this video to millions of teens and young adults on Instagram in the US, UK, Canada and Australia – countries commonly targeted by sextortion scammers.

“Our research at Thorn has shown that sextortion is on the rise and poses an increasing risk to youth. It’s a devastating threat – and joint initiatives like this that aim to inform kids about the risks and empower them to take action are crucial.”

– Kelbi Schnabel, Senior Manager at Thorn

We’ve teamed up with creators teens love to help raise awareness of these scams while assuring them that sextortion is never their fault, and that there’s help available. Starting today, these creators will add their voices to the campaign by sharing educational content with their followers on Instagram.

We’re also partnering with a group of parent creators to help parents understand what sextortion is, how to recognize the signs of a sextortion scam and what steps to take if their teen becomes a victim of this crime. Creators will direct parents to helpful resources we developed with Thorn, including conversation guides.

New Safety Features to Disrupt Sextortion

In addition to this campaign, Meta is announcing a range of new safety features designed to further protect people from sextortion and make it even harder for sextortion criminals to succeed. These features complement our recent announcement of Teen Accounts, which give tens of millions of teens built-in protections that limit who can contact them, the content they see and how much time they’re spending online. Teens under 16 aren’t able to change Teen Account settings without a parent’s permission.

With Instagram Teen Accounts, teens under 18 are defaulted into stricter message settings, which mean they can’t be messaged by anyone they don’t follow or aren’t connected to – but accounts could still request to follow them, and teens could choose to follow back. Now, we’re also making it harder for accounts showing signals of potentially scammy behavior to request to follow teens. Depending on the strength of these signals – which include how new an account is – we’ll either block the follow request completely, or send it to a teen’s spam folder.

Sextortion scammers often misrepresent where they live to trick teens into trusting them. To help prevent this, we’re testing new safety notices in Instagram DM and Messenger to let teens know when they’re chatting with someone who may be in a different country.

Sextortion scammers often use the following and follower lists of their targets to try to blackmail them. Now, accounts we detect as showing signals of scammy behavior won’t be able to see people’s follower or following lists, removing their ability to exploit this feature. These potential sextorters also won’t be able to see lists of accounts that have liked someone’s posts, photos they’ve been tagged in, or other accounts that have been tagged in their photos.

Soon, we’ll no longer allow people to use their device to directly screenshot or screen record ephemeral images or videos sent in private messages. This means that if someone sends a photo or video in Instagram DM or Messenger using our ‘view once’ or ‘allow replay’ feature, they don’t need to worry about it being screenshotted or recorded in-app without their consent. We also won’t allow people to open ‘view once’ or ‘allow replay’ images or videos on Instagram web, so that this screenshot prevention can’t be circumvented there.

After first announcing the test in April, we’re now rolling out our nudity protection feature globally in Instagram DMs. This feature, which will be enabled by default for teens under 18, will blur images detected as containing nudity when they are sent or received in Instagram DMs and will warn people of the risks associated with sending sensitive images. We’ve also worked with Larry Magid at ConnectSafely to develop a video for parents, which will be available on the Meta Safety Center’s Stop Sextortion page, that explains how the feature works.

Providing More Support In-App

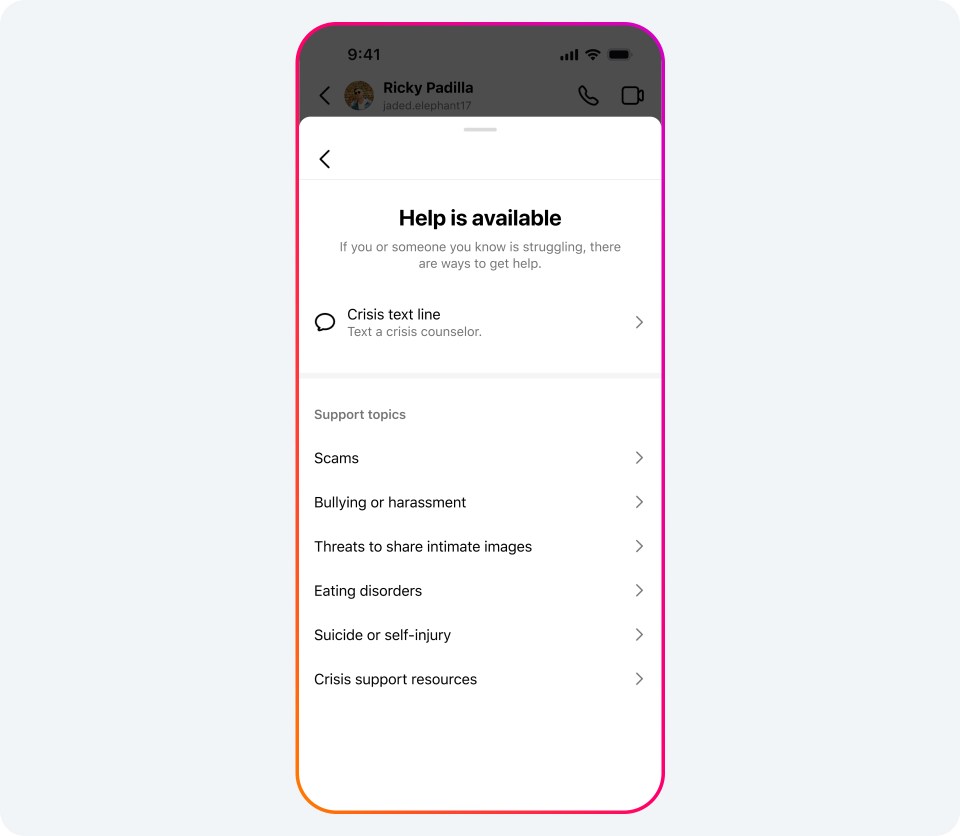

We’re also partnering with Crisis Text Line in the US to provide people with free, 24/7, confidential mental health support when they need it. Now, when people report issues related to sextortion or child safety – such as nudity, threats to share private images, or sexual exploitation or solicitation – they’ll see an option to chat live with a volunteer crisis counselor from Crisis Text Line.

Taking Action Against Sextortion Criminals

Last week, we removed over 1,620 assets – 800 Facebook Groups and 820 accounts – that were affiliated with Yahoo Boys and were attempting to organize, recruit and train new sextortion scammers. This comes after we announced in July that we’d removed around 7,200 Facebook assets that were engaging in similar behavior. Yahoo Boys are banned under Meta’s Dangerous Organizations and Individuals policy – one of our strictest policies. While we already aggressively go after violating Yahoo Boys accounts, we’re putting new processes in place which will allow us to identify and remove these accounts more quickly.

We’re constantly working to improve the techniques we use to identify scammers, remove their accounts and stop them from coming back. When our experts observe patterns across sextortion attempts, like certain commonalities between scammers’ profiles, we train our technology to recognize these patterns. This allows us to quickly find and take action against sextortion accounts, and to make significant progress in detecting both new and returning scammers. We’re also sharing aspects of these patterns with the Tech Coalition’s Lantern program, so that other companies can investigate whether the same patterns appear on their own platforms.

These updates represent a big step forward in our fight against sextortion scammers, and we’ll continue to evolve our defenses to help protect our community.