Hamas’s promise to broadcast the murder of hostages on its social media sites has school leaders and psychologists concerned about students’ safety.

Copyright 2023 The Associated Press. All rights Reserved.

Schools in the United States and Israel are encouraging parents to remove their children’s social media accounts, fearing that Hamas terrorists could use popular apps as a weapon to spread footage of brutal killings.

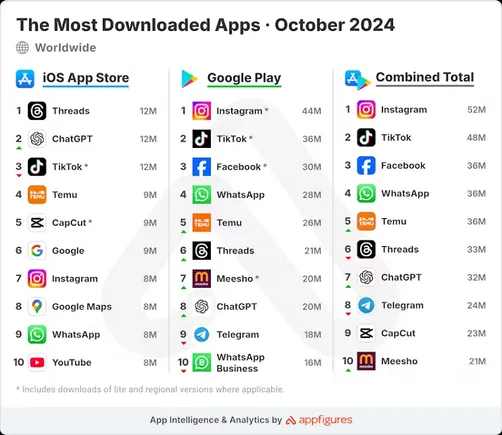

Schools across the United States and Israel are urging parents to ensure their children delete TikTok, Instagram, Facebook and Telegram “immediately” in anticipation of the terrorist group Hamas broadcasting videos of the execution of hostages it took in its attack against Israel.

A Hamas spokesperson warned that the group would post pictures of captured civilians if Israel attacked Gazans without prior warning. According to the White House, in addition to the 150 Israelis taken hostage, at least twenty missing Americans may also be among those held by Hamas. The U.S. government has designated Hamas a terrorist group and has designated some of its members as terrorists.

“Dear Parents: We have been notified that soon there will be videos sent of the hostages begging for their lives,” one school wrote in a memo to families reviewed by SME. “Please delete TikTok from your children’s phones. We cannot allow our children to see them.”


“It’s hard for us, it’s impossible for us, to digest all of the content displayed on various social networks,” said the note, which SME translated from Hebrew. A separate letter in Hebrew called on parents to delete Facebook and Telegram from their kids’ phones, in addition to TikTok.

In New Jersey, a private high school sent a letter to almost 1,000 parents and students.

“Local psychologists have reached out to us and informed us that the Israeli government is urging parents to tell their children to delete Instagram and TikTok immediately,” the principal wrote in an email seen by SME. “We strongly advise our students to do the same as soon as possible. … As one Israeli psychologist noted, ‘The videos and testimonies we are currently exposed to are bigger and crueler than our souls can contain.’”

SME has removed the school names for security purposes. Scores of parents in Arizona, New York, Canada and the U.K. said on social media that they too had been given this guidance by schools.


Since Hamas waged its surprise attack on Israel on Saturday, gruesome videos of the violence have quickly gone viral on the world’s most widely-used social media platforms, testing the companies’ policies and processes aimed at preventing or removing harmful content. Graphic reels of bloodied women who’d been raped, kidnapped or killed—and whose corpses were then paraded around Gaza as soldiers sat on them and onlookers spit on them—have been easy to find across the major platforms.

News of schools blasting out letters urging families to delete popular apps prompted Senator Rick Scott to call on the platforms to remove troubling posts and accounts that “instill fear and create chaos” and prevent Hamas from monetizing them in any way. “We’ve seen reports of babies savagely beheaded. The parents of children are shot. The elderly dragged through the streets,” Scott said. “Now Iran-backed Hamas wants to inflict more terror by sharing videos of hostages begging for their lives in Gaza. Companies that operate social media MUST act. TikTok, Instagram (Meta), X and every other social media platform have an obligation to stop these terrorists from distributing posts containing violence and murder and collecting financial support for their terror operations.”

TikTok and Meta (which owns Facebook and Instagram) did not immediately respond to requests for comment about the schools’ concerns and how the companies are approaching the possibility that Hamas terrorists may try to weaponize mainstream platforms to spread these violent videos.

TikTok’s policies state that it does not allow “violent and hateful organizations or individuals” on the platform. The policy states that mass murderers, hate or criminal organizations, and violent extremists are all prohibited. Meta also prohibits organizations and individuals “organizing or advocating for violence against civilians… or engaging in systematic criminal operations.” That includes groups designated as terrorist organizations by the U.S. government, such as Hamas, or individuals designated as terrorists. “We remove praise, substantive support, and representation of [these] entities as well as their leaders, founders, or prominent members,” Meta’s rules state.

Politicians are concerned about more than viral videos of the violence. The sites have also spread misinformation about the war, including deceptive video clips of purported damage, victims and rescues. That misinformation has spread as many people have turned to social media, especially X (formerly Twitter), for updates from the ground.

For example, European commissioner Thierry Breton demanded action from X on Tuesday to address “illegal content and disinformation” being spread in the EU, warning that the company’s moderation (or lack thereof) of this material could violate the bloc’s Digital Services Act. In a letter to owner Elon Musk, Breton called on X to be clearer about what’s allowed on the site when it comes to terrorist or violent content and faster to take it down. The platform has been plagued by “fake and manipulated images and facts,” including “repurposed old images of unrelated armed conflicts or military footage that actually originated from video games,” Breton wrote, creating a “risk to public security and civic discourse.” Musk replied that “our policy is that everything is open source and transparent, an approach that I know the EU supports.”

At X, the conflict is arguably the company’s highest-stakes challenge since Musk took over Twitter one year ago. Its safety team said this week that it has seen a surge of active users in the conflict area and that X leaders were deploying “the highest level of response” to protect discourse as the crisis intensifies. This includes removing new Hamas accounts, monitoring antisemitic speech and working with an industry anti-terrorism group, the Global Internet Forum to Counter Terrorism, to track problem trends. Its tolerance for graphic content, however, appears to be high.

“We know that it’s sometimes incredibly difficult to see certain content, especially in moments like the one unfolding,” the safety team’s statement said. “In these situations, X believes that, while difficult, it’s in the public’s interest to understand what’s happening in real time.”

Rina Torchinsky contributed reporting.