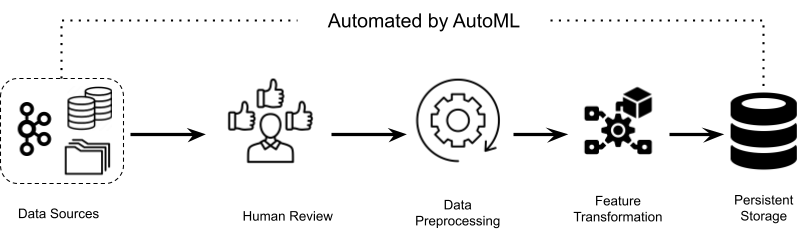

Figure 3: How the AutoML framework automates the model training, development, and deployment steps.

The AutoML framework trains classifiers, experimenting with multiple model architectures in parallel. It performs a systematic search over a range of hyperparameters, optimization approaches, and models, saving data scientists the effort of trying different algorithms manually.

The AutoML framework also offers an automated process for model evaluation and selection. By taking specified evaluation metrics as input, it systematically assesses multiple trained models, identifying the top-performing model for production deployment.
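The search-and-select loop described above can be sketched in a few lines. This is a minimal illustration, not LinkedIn's implementation: the search space, the `train_and_evaluate` stand-in, and the `auc` metric name are all assumptions made for the example, and the scores are a made-up deterministic function so the sketch runs anywhere.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

# Hypothetical search space; names and values are illustrative only.
SEARCH_SPACE = {
    "architecture": ["logistic_regression", "small_cnn"],
    "learning_rate": [0.1, 0.01],
}


def train_and_evaluate(config):
    """Stand-in for a real training job: returns validation metrics for one config."""
    base = {"logistic_regression": 0.80, "small_cnn": 0.85}[config["architecture"]]
    bonus = 0.05 if config["learning_rate"] == 0.01 else 0.0
    return {"config": config, "metrics": {"auc": round(base + bonus, 2)}}


def run_search(space, metric, max_workers=4):
    """Evaluate every combination in parallel and return (best, all_results)."""
    keys = list(space)
    configs = [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(train_and_evaluate, configs))
    # Model selection: pick the run with the highest value of the chosen metric.
    best = max(results, key=lambda r: r["metrics"][metric])
    return best, results


best, results = run_search(SEARCH_SPACE, metric="auc")
print(best["config"], best["metrics"])
```

Passing the metric name as an argument mirrors the idea of taking evaluation metrics as input: the same search can select a different winner if the team cares about a different metric.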

The framework also automates several other critical steps leading up to deployment. It generates comprehensive reports that evaluate the new model across key ML metrics and compare it in detail with any existing baseline models, which helps teams decide whether to update the models in production. The framework can also set the operating threshold automatically so that the model meets specific production requirements, such as running at a target precision or recall.
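As an illustration of automatic threshold setting, the sketch below picks the lowest score threshold that still meets a target precision on a labeled validation set. The function name and the sample data are hypothetical, and this is one simple way to do it rather than a description of how the framework does it.

```python
def threshold_for_precision(scores, labels, target_precision):
    """Return the lowest score threshold whose precision meets the target.

    Items with score >= threshold are predicted positive. Lower thresholds
    flag more items, so the lowest qualifying threshold gives the highest
    recall among thresholds that meet the target. Returns None if no
    threshold reaches the target.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    best = None
    true_pos = 0
    for i, (score, label) in enumerate(ranked, start=1):
        true_pos += label
        # Only evaluate at boundaries between distinct scores, so tied
        # scores are always treated as a group.
        is_boundary = i == len(ranked) or ranked[i][0] != score
        if is_boundary and true_pos / i >= target_precision:
            best = score
    return best


# Made-up validation scores and ground-truth labels (1 = violating content).
val_scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40]
val_labels = [1, 1, 0, 1, 0, 0]

print(threshold_for_precision(val_scores, val_labels, target_precision=0.75))
```

Precision is not monotonic in the threshold, which is why the loop scans every candidate cut instead of stopping at the first one that fails the target.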

Model deployment

The AutoML framework also automates the critical phase of model deployment, integrating the offline training pipelines with the content moderation production infrastructure. One of its key functions is publishing the newly trained model to the model artifactory so that production machines can access it directly. The framework also helps ensure that the model adheres to the contract specifying the expected input and output parameters within the production system. This increases the likelihood that the deployed model behaves as intended in the production environment and reduces discrepancies between offline and online behavior.
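A contract check of this kind can be as simple as running the model on a sample and comparing field names and types against a declared schema before publishing. The sketch below assumes a dictionary-based contract; the `CONTRACT` layout, field names, and toy models are hypothetical and only illustrate the idea.

```python
# Hypothetical contract: field names and types are illustrative only.
CONTRACT = {
    "inputs": {"text": str},
    "outputs": {"score": float, "label": str},
}


def check_contract(predict, sample, contract):
    """Run the model on one sample and return a list of contract violations."""
    errors = []
    for name, expected in contract["inputs"].items():
        if not isinstance(sample.get(name), expected):
            errors.append(f"input '{name}' should be {expected.__name__}")
    if errors:
        # Do not call the model with an input it is not expected to handle.
        return errors
    output = predict(sample)
    for name, expected in contract["outputs"].items():
        if name not in output:
            errors.append(f"output '{name}' is missing")
        elif not isinstance(output[name], expected):
            errors.append(f"output '{name}' should be {expected.__name__}")
    return errors


def toy_model(sample):
    """Stand-in for a trained classifier that honors the contract."""
    return {"score": 0.12, "label": "ok"}


def broken_model(sample):
    """Stand-in for a model that returns the wrong type and omits a field."""
    return {"score": "0.12"}


print(check_contract(toy_model, {"text": "hello"}, CONTRACT))
print(check_contract(broken_model, {"text": "hello"}, CONTRACT))
```

A deployment pipeline could refuse to publish any model for which the returned list is non-empty.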

- Scale: LinkedIn’s AutoML system was built to be widely used across the engineering teams at LinkedIn. One of the major challenges in building such a framework was streamlining the data ingestion pipelines so that they scale across different content sources, such as text, multimedia, and content that combines both. We also designed AutoML to support the addition of new algorithms to different components, such as data preprocessing, hyperparameter tuning, and metric computation.

- Optimization: The framework needed to support quick experimentation, large datasets, and multiple modeling architectures in parallel. We put significant effort into optimizing build time, runtime, and memory usage so that developer productivity would not suffer while using the AutoML framework.

- Usability: With all the components and parameters associated with AutoML, we wanted the framework to strike a good balance between ease of use and exposing configuration parameters to developers with different levels of ML expertise.

- Speed and efficiency: Scaling AutoML to all the content moderation classifiers at LinkedIn (including multimodal and multi-task learning based models) is the primary objective of building the platform. As adoption grows, we anticipate a substantial rise in concurrently running modeling experiments, leading to a manifold increase in demand for GPUs and other computational resources. We are working to improve the efficiency of the system so that it can keep up with these growing requirements and minimize the turnaround time for workflow completion.

- Generative AI: Generative AI has the potential to improve the quality of datasets (for instance, by reducing label noise) as well as to generate synthetic datasets for model training. We are exploring these types of solutions, which can eventually help improve the accuracy of our classifiers.

- AI governance: Proper AI governance helps us design and deploy content moderation AI systems in a way that continues to be safe, fair, and transparent. To build additional trust in our content moderation defenses among our members and other stakeholders, we plan to integrate different fairness assessment solutions into the AutoML framework.

Acknowledgements

We would like to thank our colleagues Dhanraj Shetty, Bharat Jain, James Verbus, Suhit Sinha, Sumit Srivastava, Akshay Pandya, and Prateek Bansal, who worked on this project. The project also relied on contributions from many other members of the team. Big thanks to Praveen Hegde, Shah Alam, Sakshi Verma, Tushar Deo, Abhishek Maiti, Shivansh Mundra, Abhijit KP, Nivedita Rufus, and Akshat Mathur for their significant contributions.

Many thanks to Grace Tang, Daniel Olmedilla, and our management, Vipin Gupta and Smit Marvaniya, for their thought leadership; to Jidnya Shah for valuable inputs; and to our TPMs Shreya Mukhopadhyay and Abraham C for their support, guidance, and resources.