AI can be used for good and for evil, and on YouTube, the latter could mean posting videos that mislead viewers into thinking what they see is real. To curb this, YouTube will soon require creators to disclose when realistic videos are made or altered using AI, and the platform will show labels to viewers.

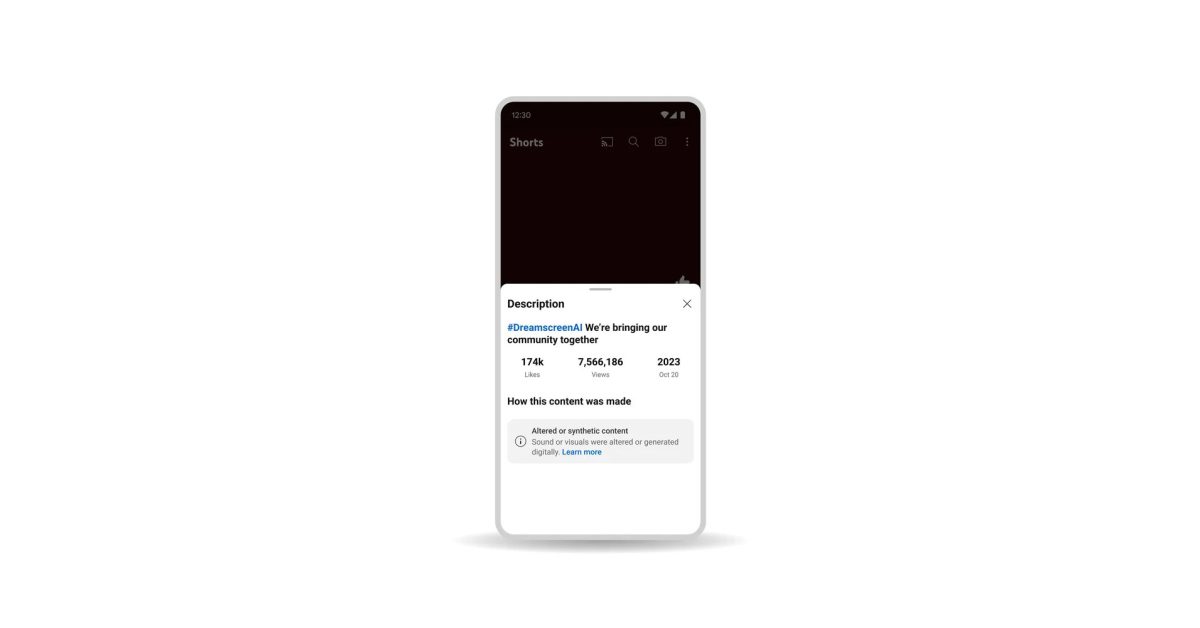

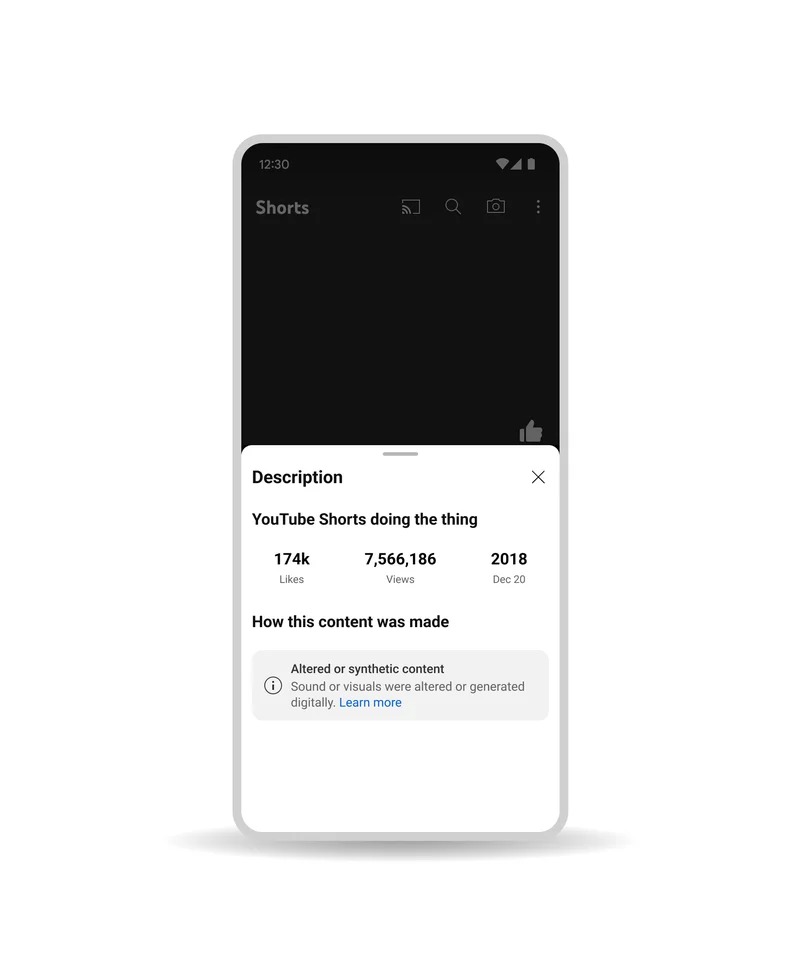

In a blog post today, YouTube announced that, in the “coming months,” it will require creators to disclose the use of AI in a video, which will result in a label being shown on videos that are “synthetic.”

YouTube hasn’t yet made clear exactly where it will draw the line on which videos need to disclose the use of AI, but the scope appears broad. YouTube says the disclosure will apply to material that is “realistic,” whether it has been altered by AI or is entirely synthetic. The label will appear on both full-length videos and Shorts.

YouTube explains:

> To address this concern, over the coming months, we’ll introduce updates that inform viewers when the content they’re seeing is synthetic. Specifically, we’ll require creators to disclose when they’ve created altered or synthetic content that is realistic, including using AI tools. When creators upload content, we will have new options for them to select to indicate that it contains realistic altered or synthetic material. For example, this could be an AI-generated video that realistically depicts an event that never happened, or content showing someone saying or doing something they didn’t actually do.

YouTube further explains that videos on “sensitive topics,” such as elections, ongoing conflicts, and health, will display these AI labels more prominently. Creators who consistently fail to disclose AI-aided content on YouTube will be subject to content removal as well as suspension from the YouTube Partner Program.

YouTube is also taking a firmer stand against AI-generated music, such as the fake Drake songs that went viral earlier this year. To address this, music labels and distributors will soon be able to request the removal of such content, as YouTube explains:

> We’re also introducing the ability for our music partners to request the removal of AI-generated music content that mimics an artist’s unique singing or rapping voice. In determining whether to grant a removal request, we’ll consider factors such as whether content is the subject of news reporting, analysis or critique of the synthetic vocals. These removal requests will be available to labels or distributors who represent artists participating in YouTube’s early AI music experiments. We’ll continue to expand access to additional labels and distributors over the coming months.

