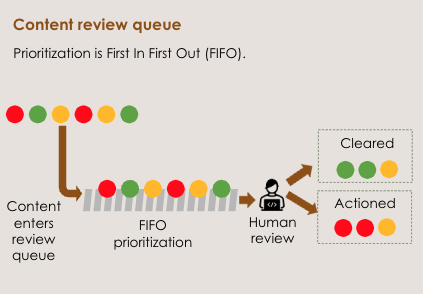

This decision layer for serving the ML models' results is integrated into LinkedIn's existing review assignment and prioritization tooling. The content review queue uses an intelligent review assignment framework that combines these scores with a few other static parameters to prioritize items for human review.
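The blend of model scores and static parameters can be pictured as a single priority value per queued item. The sketch below is illustrative only: the fields `violation_prob`, `severity_weight` and `hours_in_queue`, the linear formula, and the `age_weight` value are hypothetical assumptions, not the actual parameters used by LinkedIn's framework.

```python
from dataclasses import dataclass


@dataclass
class ReviewItem:
    item_id: str
    violation_prob: float   # model output in [0, 1] (hypothetical field)
    severity_weight: float  # static weight for the policy area (hypothetical)
    hours_in_queue: float   # static age parameter (hypothetical)


def priority(item: ReviewItem, age_weight: float = 0.01) -> float:
    """Higher value means review sooner. The formula and weights are assumptions."""
    return item.violation_prob * item.severity_weight + age_weight * item.hours_in_queue


def order_queue(items):
    """Return items sorted from highest to lowest priority."""
    return sorted(items, key=priority, reverse=True)


items = [
    ReviewItem("a", 0.2, 1.0, 5),  # low score, waited longest
    ReviewItem("b", 0.9, 1.0, 1),  # high score
    ReviewItem("c", 0.5, 2.0, 0),  # medium score in a high-severity area
]
print([i.item_id for i in order_queue(items)])
```

A weighted sum keeps the example easy to read; a production system could just as well use a learned or rule-based combination.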

Advantages of the new approach

Our legacy framework used high-level static buckets with varied SLAs to prioritize content in the review queue. The new content review prioritization framework takes a more dynamic and technically sophisticated approach, with the following hallmarks:

- This approach to prioritization is fully dynamic: content in the review queue can move up or down based on its probability of being policy-violating.
- The scores for a piece of content are updated continuously, and the final decision to prioritize or de-prioritize it is based on all of the information accumulated over time rather than only the information available at a single point in time.
- The probability scores are calculated by AI models, which makes the entire framework probabilistic and gives it more room for optimization than a static framework with rigid rules.
- The models are triggered when a piece of content is reported for review, rather than at creation time as existing content classification services are. As a result, they can use additional information that was not available when the content was created.
- This framework also augments the capacity of human reviewers, so that review capacity can scale non-linearly with the help of automation and machine intelligence instead of growing only linearly as we hire more team members. This will help us keep up with the ever-growing content and review volumes on LinkedIn.
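Moving content up or down as its score changes calls for a priority queue that supports re-scoring. The sketch below uses Python's `heapq` with lazy invalidation: every re-score pushes a fresh entry, and stale entries are skipped when they reach the top. The class name `DynamicReviewQueue` is hypothetical, and the sketch assumes each new model score already reflects all information gathered so far, so the newest score simply supersedes older ones.

```python
import heapq
import itertools


class DynamicReviewQueue:
    """Max-priority review queue whose items can be re-scored at any time (hypothetical)."""

    def __init__(self):
        self._heap = []                # entries: (-score, seq, item_id)
        self._latest = {}              # item_id -> seq of its current (valid) entry
        self._seq = itertools.count()  # tie-breaker: earlier entries win ties

    def upsert(self, item_id, score):
        """Add an item, or replace its score so it moves up or down the queue."""
        seq = next(self._seq)
        self._latest[item_id] = seq
        heapq.heappush(self._heap, (-score, seq, item_id))

    def pop(self):
        """Remove and return (item_id, score) for the highest current score."""
        while self._heap:
            neg_score, seq, item_id = heapq.heappop(self._heap)
            if self._latest.get(item_id) == seq:  # skip entries superseded by a re-score
                del self._latest[item_id]
                return item_id, -neg_score
        raise IndexError("pop from empty review queue")

    def __len__(self):
        return len(self._latest)


q = DynamicReviewQueue()
q.upsert("post-1", 0.30)
q.upsert("post-2", 0.60)
q.upsert("post-1", 0.85)  # e.g. new reports raise post-1's score
first = q.pop()
second = q.pop()
```

Lazy invalidation avoids searching the heap on every update, at the cost of leaving stale entries behind until they surface.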

Impact

This new content review prioritization framework can make automatic decisions on ~10% of all queued content at our established (extremely high) precision standard, a standard that exceeds the performance of a typical human reviewer. These savings reduce the burden on human reviewers, allowing them to focus on content that needs their review because of its severity or ambiguity. By dynamically prioritizing content in the review queue, the framework also reduces the average time taken to catch policy-violating content by ~60%. This faster identification and subsequent removal of such content significantly reduces the number of unique members exposed to violative content each week.
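The ~10% figure is a coverage number at a fixed precision. One common way to derive such an operating point, shown here as an assumption rather than LinkedIn's documented procedure, is to sort a labeled validation set by model score and pick the lowest threshold whose auto-decided set still meets the precision target. The function `auto_decision_threshold` and the validation data are hypothetical, and the sketch covers only the "violating" decision direction.

```python
def auto_decision_threshold(scores, labels, target_precision):
    """Pick the lowest score threshold whose auto-decided set meets the target precision.

    scores: model probabilities for a labeled validation set.
    labels: 1 if the item was truly violating, else 0.
    Returns (threshold, coverage), where coverage is the fraction of items with
    score >= threshold, or (None, 0.0) if no threshold qualifies.
    """
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    best = (None, 0.0)
    true_pos = 0
    for i, (score, label) in enumerate(pairs):
        true_pos += label
        # Only cut between distinct scores so tied items are decided together.
        is_cut = i + 1 == len(pairs) or pairs[i + 1][0] < score
        if is_cut and true_pos / (i + 1) >= target_precision:
            best = (score, (i + 1) / len(pairs))  # later cuts cover more items
    return best


# Hypothetical validation data: 10 items, 5 truly violating.
scores = [0.99, 0.97, 0.95, 0.90, 0.80, 0.60, 0.40, 0.20, 0.10, 0.05]
labels = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
threshold, coverage = auto_decision_threshold(scores, labels, target_precision=0.95)
```

On this toy data the top four items are all violating, so the threshold lands at 0.90 with 40% coverage; a real deployment would use far more data and a stricter target.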

Looking ahead

So far, we have rolled out this new content review prioritization framework for feed posts and comments, and we are working to extend it to other LinkedIn products. We are also using the framework's foundational capabilities to enhance other review processes, such as reviewer assignment. These steps will further strengthen our content moderation practices and help keep LinkedIn among the most trusted professional networks.

Acknowledgements

Multiple teams across LinkedIn have come together to make this framework a reality and make the platform a safer place for our members. Teams including Trust Data Science, Trust ML Infrastructure, Trust Tools Engineering, Transparency Engineering, Trust Product, Trust AI, and Trust and Safety have all contributed to the development of this framework.